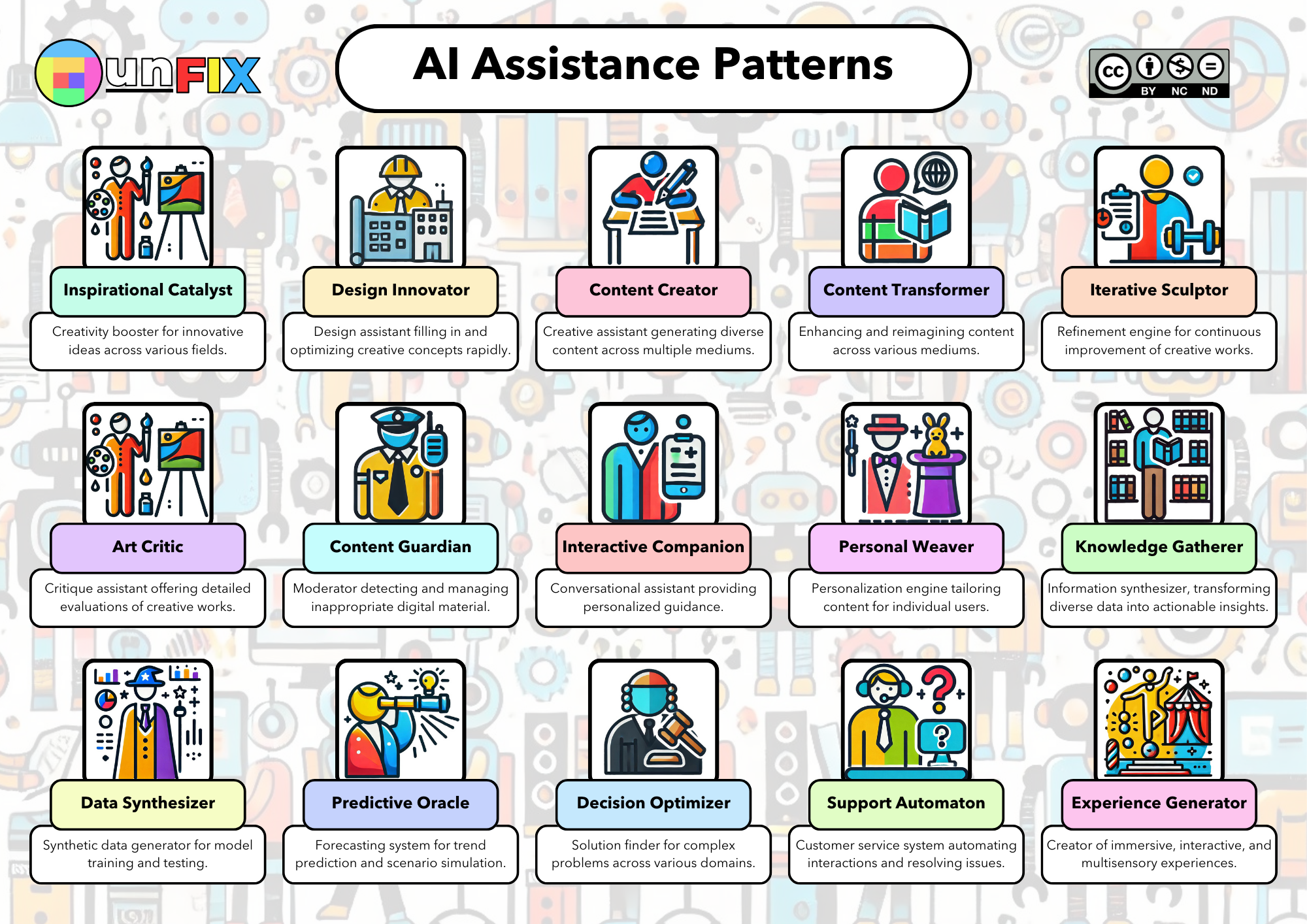

The Content Guardian

We’ve uncovered fifteen AI use case patterns (there are probably more) and given each one a name. This is the seventh of the fifteen.

Moderator detecting and managing inappropriate digital material.

While people worry about misbehaving AIs, the AIs are already dealing with misbehaving people. The Content Guardian is our name for the use case of detecting, managing, and filtering inappropriate or harmful content across digital platforms.

Using natural language processing, image recognition, and behavioral analysis, AIs can identify and act on problematic content quickly and at scale. They can tackle hate speech, cyberbullying, spam, and age-restricted content across many kinds of digital spaces. The pattern helps platforms uphold community standards, keep users safe, and foster a positive online environment.

Examples:

A social media platform employs the Content Guardian pattern when it automatically detects and removes deepfake videos in real time, limiting the spread of misinformation.

An online gaming community may use the pattern to monitor in-game chat, identifying and addressing instances of hate speech and cyberbullying.

A global e-commerce website could implement the Content Guardian to screen user-generated product listings and reviews, filtering out listings for counterfeit goods as well as fake reviews.

With the Content Guardian, the focus is on ensuring users adhere to the content policies set forth by platform owners and moderators.