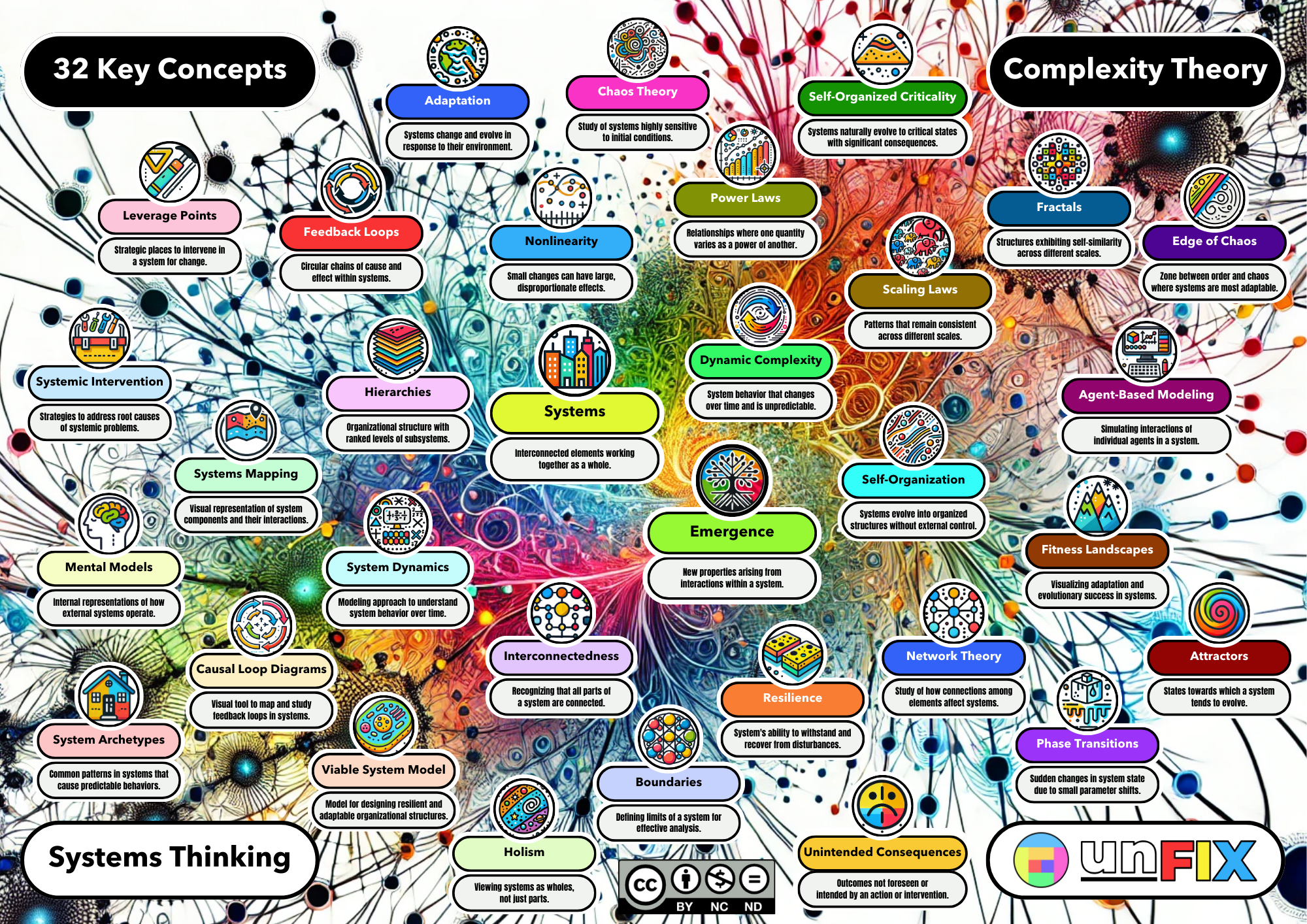

32 Key Concepts in Systems Thinking and Complexity Theory

Author: Jurgen Appelo

I’ve said it many times: systems thinking and complexity science offer a solid foundation for Lean, Agile, and other modern approaches to organizational design and development. Concepts such as feedback loops, self-organization, and adaptation come straight from systems theory. But what are the other ideas? To my surprise, I could not find a satisfactory overview of the key insights of systems thinking and complexity theory. With little else to do while I sheltered in my cabin from a terrible downpour in Grenada, I set out to make my own.


(High-res PDF available for partners, facilitators and workshop attendees)

The Approach

Generative AI excels at identifying patterns in human discourse. While AIs cannot tell us what is true, they are very good at telling us what humans talk about (and thus believe to be true). Therefore, using AIs to generate a synthesis of conversations around systems theory was an obvious first step.

I asked both OpenAI’s ChatGPT 4o and Anthropic’s Claude 3.5 Sonnet to give me the fundamental concepts of systems thinking and the key concepts of complexity theory, as they can be found in public discourse. Then, I asked Google’s Gemini to merge the results and add what it believed to be missing. This resulted in two lists of system concepts: one for systems thinking and one for complexity science.

It was evident from the start that the two lists would overlap. Interconnectedness, resilience, and boundaries are some examples of concepts fundamental to both systems thinking and complexity science. However, a few ideas (such as holism and systemic interventions) are typically only discussed within systems thinking circles. In contrast, others (such as fractals, attractors, and fitness landscapes) are primarily topics in the domain of complexity theory.

My first thought was to visualize the results as a Venn diagram with two intersecting circles and hard boundaries between them. But then I reconsidered. Such a visual would inevitably lead to endless discussions about the “correct” placement of concepts. (“I disagree about where you placed Hierarchies in your diagram!”) This would only distract from my goal: to offer a coherent overview that can serve as a starting point for further exploration. Nothing more.

Then, I realized I could use an AI to weigh the relative prevalence of the 32 concepts in public discussions. Specifically, I asked ChatGPT, “On a scale of 0 to 100, rate how often these concepts are discussed across the two domains of systems thinking versus complexity theory.” On the resulting scale, 0 means a concept is discussed only in systems thinking, and 100 means it is discussed only in complexity theory. Instead of hard circles and boundaries, I prefer to visualize a complex web of ideas, with some leaning toward systems thinking and others leaning more toward complexity theory. No item is 100% aligned with the left or the right; most sit somewhere on a scale between the two extremes. It’s almost like politics! (Well, in most countries.)

Given my wide reading across both domains (I must have read a hundred books and a thousand articles on systems theories), I was pleased to see that ChatGPT’s results corresponded very well with my own experience. It was also nice to see the two concepts of Systems and Emergence end up smack in the middle.

Finally, it was easy (and fun!) to use DALL-E to generate visuals and then ask Claude, Gemini, and ChatGPT to help me write (and rewrite) 32 one-liners and descriptions. I used Canva to manually throw it all together and tweak the design until it looked as colorful as my face after a spicy Caribbean meal.

So, here it is: the result of one day of creative work between me and my small team of powerful AI assistants. I hope you find it helpful in exploring the theoretical underpinnings of Lean, Agile, and other forward-thinking management approaches.

Thirty-two Key Concepts

System Archetypes: 10 (Almost exclusively discussed in systems thinking)

Common patterns in systems that cause predictable behaviors.

System archetypes are recurring patterns of behavior found in various systems. They help identify underlying structures causing specific behaviors. These archetypes serve as diagnostic tools for understanding and addressing recurring systemic issues.

Example: Overfishing in international waters (“Tragedy of the Commons”)

VSM (Viable System Model): 10 (Almost exclusively discussed in systems thinking)

Model for designing resilient and adaptable organizational structures.

Cybernetician Stafford Beer developed the Viable System Model as a framework for understanding and designing complex organizations. It aims to ensure that each part of the organization can survive independently while contributing to the overall system’s adaptability and resilience.

Example: A corporation structured with autonomous business units.

Causal Loop Diagrams: 15 (Primarily used in systems thinking)

Visual tool to map and study feedback loops in systems.

Causal loop diagrams illustrate the relationships and feedback loops within a system. They help visualize how different elements interact, revealing cycles of cause and effect that drive system behavior over time. These diagrams are also used to identify potential leverage points for system intervention.

Example: A thermostat regulating room temperature.
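A causal loop diagram can be read as a set of signed links between variables. The sketch below, in Python, encodes the thermostat example that way and applies the common convention that a loop with an even number of negative links is reinforcing and a loop with an odd number is balancing. The variable names and the `loop_polarity` function are hypothetical illustrations, not part of any diagramming tool.

```python
from math import prod

# Hypothetical representation: each causal link (cause, effect) carries a
# polarity of +1 (both move in the same direction) or -1 (opposite directions).
Links = dict[tuple[str, str], int]

def loop_polarity(links: Links, loop: list[str]) -> str:
    """Classify a closed loop as reinforcing or balancing.

    The loop is reinforcing when the product of its link signs is positive,
    i.e. when it contains an even number of negative links.
    """
    edges = zip(loop, loop[1:] + loop[:1])  # close the loop back to the start
    sign = prod(links[edge] for edge in edges)
    return "reinforcing" if sign > 0 else "balancing"

thermostat: Links = {
    ("room temperature", "temperature gap"): -1,  # warmer room -> smaller gap to target
    ("temperature gap", "heater output"): +1,     # bigger gap -> more heating
    ("heater output", "room temperature"): +1,    # more heating -> warmer room
}

print(loop_polarity(thermostat, ["room temperature", "temperature gap", "heater output"]))
# balancing
```

A single negative link is enough to make the thermostat loop balancing, which matches the intuition that it pulls the room toward a target instead of amplifying change.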

Mental Models: 20 (Primarily discussed in systems thinking, though relevant in complexity science)

Internal representations of how external systems operate.

Mental models are the assumptions and beliefs individuals hold about how systems function. These models influence decision-making and problem-solving, highlighting the importance of aligning perceptions with actual system dynamics. Challenging and updating mental models is a crucial aspect of systems thinking.

Example: Assuming the economy is driven by rational humans as decision-makers.

Holism: 20 (Primarily a systems thinking concept, though it underpins some complexity science discussions)

Viewing systems as wholes, not just parts.

Holism emphasizes understanding systems by looking at the whole rather than analyzing individual components in isolation. It recognizes that the properties and behaviors of the whole system emerge from the interactions of its parts. This concept is often contrasted with reductionism.

Example: Understanding a forest as one living ecosystem, not just separate trees.

Systems Mapping: 20 (Primarily a tool in systems thinking)

Visual representation of system components and their interactions.

Systems mapping involves creating diagrams that show the components of a system and how they interact. This helps in understanding the system’s structure, identifying key elements, and uncovering relationships and dependencies. This technique is also useful for identifying feedback loops and potential intervention points.

Example: Visualizing the entire supply chain (or web) for a product.

System Dynamics: 20 (Primarily a systems thinking methodology)

Modeling approach to understand system behavior over time.

System dynamics, pioneered by Jay Forrester at MIT, is a methodological approach to studying and modeling the behavior of complex systems over time. It uses tools like stock and flow diagrams and differential equations to simulate system responses to changes.

Example: Modeling the growth and decline of a business.
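A minimal stock-and-flow sketch in Python, stepping the model forward in small time increments (simple Euler integration) instead of solving the differential equation analytically. It assumes a hypothetical business with one stock (customers), one inflow (word-of-mouth adoption limited by the remaining market), and one outflow (churn); the function name and all parameter values are invented for illustration.

```python
def simulate_customers(initial: float, market: float, adoption_rate: float,
                       churn_rate: float, dt: float, steps: int) -> list[float]:
    """Euler-integrate one stock (customers) with one inflow and one outflow."""
    customers = initial
    history = [customers]
    for _ in range(steps):
        # Inflow: existing customers recruit new ones, limited by the market left.
        inflow = adoption_rate * customers * (1 - customers / market)
        # Outflow: a fixed fraction of customers leaves per unit of time.
        outflow = churn_rate * customers
        # The stock changes by the net flow over one time step.
        customers += (inflow - outflow) * dt
        history.append(customers)
    return history

growth = simulate_customers(100, 10_000, adoption_rate=0.5, churn_rate=0.1, dt=0.1, steps=2000)
decline = simulate_customers(100, 10_000, adoption_rate=0.5, churn_rate=0.6, dt=0.1, steps=2000)
print(round(growth[-1]))  # 8000, the equilibrium 10_000 * (1 - 0.1 / 0.5)
print(decline[-1] < 1)    # True: when churn outpaces adoption, the business fades away
```

The same structure produces growth, saturation, or decline depending only on the relative strength of the flows, which is the kind of insight system dynamics models are built to reveal.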

Systemic Intervention: 20 (Primarily a systems thinking concept)

Strategies to address root causes of systemic problems.

Systemic intervention involves designing and implementing actions that address the underlying causes of issues within a system. It focuses on long-term solutions rather than quick fixes, promoting sustainable improvements. This approach often involves multiple interventions at different leverage points.

Example: Addressing poverty by improving education and healthcare.

Boundaries: 25 (More emphasized in systems thinking but relevant to complexity science)

Defining limits of a system for effective analysis.

Boundaries determine what is included in a system and what is considered external. Setting boundaries is crucial for focusing analysis, understanding interactions, and ensuring relevant factors are considered in decision-making. In complex systems, these boundaries are often fuzzy or permeable.

Example: Defining the scope of a project within an organization.

Interconnectedness: 30 (Mostly discussed in systems thinking but relevant to complexity science)

Recognizing that all parts of a system are connected.

Interconnectedness highlights how elements within a system are linked and influence one another. Changes in one part can have ripple effects throughout the system, emphasizing the need for holistic thinking. This concept is fundamental to understanding emergent properties in systems.

Example: The impact of pollinators on crop growth.

Hierarchies: 30 (More commonly discussed in systems thinking, though relevant to complexity science)

Organizational structure with ranked levels of subsystems.

Hierarchies refer to the arrangement of systems and subsystems in ranked layers. Each level comprises lower-level components and is part of a higher-level system, helping manage complexity through nested organization. The concept of “holarchy” is often discussed as a more flexible form of hierarchy in systems thinking.

Example: Nested levels of government (local, state, federal).

Leverage Points: 35 (Mainly a systems thinking concept, though applicable in complexity science)

Strategic places to intervene in a system for change.

Leverage points are specific areas within a system where a small change can lead to significant impacts. Identifying these points helps design effective interventions to improve system performance and achieve desired outcomes. Donella Meadows’ work on leverage points is seminal in this area.

Example: Tax incentives to promote renewable energy.

Feedback Loops: 40 (Key in systems thinking but also significant in complexity science)

Circular chains of cause and effect within systems.

Feedback loops are cycles where outputs of a system are fed back as inputs. Positive (reinforcing) feedback loops amplify changes, while negative (balancing) feedback loops stabilize the system. Both kinds are key to understanding and predicting system behavior over time.

Example: Compound interest in a savings account.
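To make the difference between the two kinds of loops concrete, here is a small Python sketch with illustrative numbers: compound interest as a reinforcing loop and a thermostat-like correction as a balancing loop. The function names and parameters are hypothetical.

```python
def reinforcing_loop(balance: float, rate: float, years: int) -> list[float]:
    """Compound interest: each year's interest is fed back into the balance."""
    history = [balance]
    for _ in range(years):
        balance += balance * rate  # the bigger the balance, the bigger the next change
        history.append(balance)
    return history

def balancing_loop(temperature: float, target: float, gain: float, steps: int) -> list[float]:
    """Thermostat-like correction: each step closes a fraction of the remaining gap."""
    history = [temperature]
    for _ in range(steps):
        temperature += gain * (target - temperature)  # the smaller the gap, the smaller the change
        history.append(temperature)
    return history

savings = reinforcing_loop(1000.0, rate=0.05, years=10)
room = balancing_loop(15.0, target=21.0, gain=0.5, steps=10)
print(round(savings[-1], 2))  # 1628.89 (keeps accelerating)
print(round(room[-1], 2))     # 20.99 (levels off at the target)
```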

Resilience: 40 (More commonly discussed in systems thinking, but also essential in complexity science)

System’s ability to withstand and recover from disturbances.

Resilience is the capacity of a system to absorb shocks and still maintain its core functions. Resilient systems can adapt to changes and recover from disruptions, ensuring long-term sustainability and stability. Resilience often involves diversity and redundancy in system components.

Example: A city’s ability to recover after a natural disaster.

Unintended Consequences: 40 (Discussed more in systems thinking but also significant in complexity science)

Outcomes not foreseen or intended by an action or intervention.

Unintended consequences are results of actions that were not anticipated. They highlight the complexity and interconnectedness of systems, emphasizing the need for careful consideration of potential ripple effects. These are often due to overlooking indirect effects or feedback loops in complex systems.

Example: Antibiotic resistance due to overuse.

Systems: 50 (Equally discussed in both systems thinking and complexity science)

Interconnected elements working together as a whole.

Systems are sets of interrelated components that interact to form a unified whole. Understanding systems involves studying how these elements influence one another and how the system functions as a coherent entity.

Example: The human body is a system of organs, fluids, and microbes.

Emergence: 50 (Equally discussed in both systems thinking and complexity science)

New properties arising from interactions within a system.

Emergence refers to the appearance of novel behaviors or properties in a system that are not present in the individual components. These emergent properties result from the interactions and relationships among the parts.

Example: The formation of a traffic jam from individual cars.

Dynamic Complexity: 55 (Slightly more emphasized in complexity science)

System behavior that changes over time and is unpredictable.

Dynamic complexity arises from the interactions and feedback loops within a system, leading to behavior that evolves and can be counterintuitive. Understanding this complexity is crucial for effective system management.

Example: Stock market fluctuations due to many factors.

Self-Organization: 60 (More central to complexity science but acknowledged in systems thinking)

Systems evolve into organized structures without external control.

Self-organization is the process by which a system spontaneously forms patterns and structures through local interactions among its components. This phenomenon is observed in natural and social systems, leading to complex behavior.

Example: Birds flocking into complex formations.

Nonlinearity: 65 (Primarily discussed in complexity science but relevant to systems thinking)

Small changes can have large, disproportionate effects.

Nonlinearity refers to relationships within a system where the effect of a change is not proportional to its cause. This can lead to unexpected outcomes and highlights the complexity of predicting system behavior.

Example: A single scream sparking a large-scale panic in a crowd.

Adaptation: 70 (More central to complexity science, especially in the context of complex adaptive systems)

Systems change and evolve in response to their environment.

Adaptation is how systems adjust to environmental changes. This ability to evolve and learn from experiences is crucial for the survival and resilience of complex systems.

Example: People and societies adapting to climate change.

Network Theory: 70 (Mostly discussed in complexity science, though network thinking is relevant to systems thinking)

Study of how connections among elements affect systems.

Network theory examines the structure and behavior of networks formed by interconnected elements. It helps understand how the arrangement of connections influences the dynamics and properties of the system.

Example: Studying the spread of rumors in a social network.
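A minimal Python sketch of the rumor example, assuming everyone passes a rumor to all their contacts once per round (a breadth-first search). The two hypothetical networks have the same five people and the same number of links; only the arrangement differs, yet the rumor needs twice as many rounds to reach everyone in the chain.

```python
from collections import deque

Graph = dict[str, list[str]]

def undirected(edges: list[tuple[str, str]]) -> Graph:
    """Build an adjacency list where every link works in both directions."""
    graph: Graph = {}
    for a, b in edges:
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)
    return graph

def spread_rounds(graph: Graph, source: str) -> dict[str, int]:
    """Return the round in which each person first hears the rumor."""
    heard = {source: 0}
    queue = deque([source])
    while queue:
        person = queue.popleft()
        for contact in graph[person]:
            if contact not in heard:
                heard[contact] = heard[person] + 1
                queue.append(contact)
    return heard

chain = undirected([("a", "b"), ("b", "c"), ("c", "d"), ("d", "e")])
star = undirected([("hub", "a"), ("hub", "b"), ("hub", "c"), ("hub", "d")])
print(max(spread_rounds(chain, "a").values()))  # 4
print(max(spread_rounds(star, "a").values()))   # 2
```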

Phase Transitions: 80 (More central to complexity science)

Sudden changes in system state due to small parameter shifts.

Phase transitions are points where a system undergoes a drastic change in state in response to a slight change in an external parameter. Examples include the transition from liquid to gas or the onset of social phenomena.

Example: A sudden bankruptcy of an organization after years of stability.

Chaos Theory: 85 (Primarily discussed in complexity science, particularly with sensitivity to initial conditions)

Study of systems highly sensitive to initial conditions.

Chaos theory explores how small differences in initial conditions can lead to vastly different outcomes in nonlinear systems. This sensitivity, known as the “butterfly effect,” makes long-term prediction difficult but helps understand complex, dynamic behavior.

Example: The unpredictable trajectory of a tossed coin.
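The butterfly effect can be demonstrated with the logistic map, x(n+1) = r * x(n) * (1 - x(n)), a standard toy model that behaves chaotically at r = 4. In this Python sketch, two trajectories start one ten-billionth apart; the starting value 0.2 is an arbitrary choice. The gap stays tiny for the first steps and then grows until the two trajectories are unrelated.

```python
def logistic(x: float, r: float = 4.0) -> float:
    """One step of the logistic map; r = 4 is a chaotic setting."""
    return r * x * (1 - x)

def trajectory(x0: float, steps: int) -> list[float]:
    """Iterate the map from x0 and return every visited state."""
    xs = [x0]
    for _ in range(steps):
        xs.append(logistic(xs[-1]))
    return xs

a = trajectory(0.2, 60)
b = trajectory(0.2 + 1e-10, 60)  # differs only in the tenth decimal place
for n in (0, 10, 20, 30, 40, 50):
    print(n, abs(a[n] - b[n]))  # the gap grows roughly exponentially, then saturates
```

The rule is fully deterministic, yet any measurement error in the starting value eventually swamps the forecast, which is why long-term prediction fails.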

Attractors: 85 (Primarily discussed in complexity science)

States towards which a system tends to evolve.

Attractors are patterns or states a system naturally gravitates towards over time. They can be fixed points, cycles, or more complex structures, helping to describe and predict system behavior.

Example: A product development team settling into predictable behaviors.
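The same logistic map, x(n+1) = r * x(n) * (1 - x(n)), also illustrates attractors, although it is a mathematical toy rather than a model of a team. In this Python sketch, the function name and burn-in length are hypothetical: with r = 2.8, different starting points settle on one fixed point, and with r = 3.2 the system settles into a cycle that alternates between two states.

```python
def settle(x0: float, r: float, burn_in: int = 1000, keep: int = 4) -> list[float]:
    """Iterate the logistic map, discard transients, return the states it keeps visiting."""
    x = x0
    for _ in range(burn_in):
        x = r * x * (1 - x)
    visited = []
    for _ in range(keep):
        x = r * x * (1 - x)
        visited.append(round(x, 6))
    return visited

print(settle(0.1, r=2.8))  # [0.642857, 0.642857, 0.642857, 0.642857]
print(settle(0.9, r=2.8))  # same fixed point from a different start
print(settle(0.1, r=3.2))  # alternates between about 0.513 and 0.799
```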

Fitness Landscapes: 85 (Primarily discussed in complexity science, particularly in evolutionary theory and optimization studies)

Visualizing adaptation and evolutionary success in systems.

Fitness landscapes are conceptual models that show how different genotypes or phenotypes fare in terms of reproductive success. They help understand adaptation and evolution by mapping the relationship between traits and fitness.

Example: Visualizing optimal beak shapes for different bird diets.

Scaling Laws: 85 (Primarily discussed in complexity science)

Patterns that remain consistent across different scales.

Scaling laws describe how specific properties of a system change with size. These laws reveal consistent patterns across different scales, providing insights into the underlying principles governing scalable complex systems.

Example: City sizes and infrastructure scaling predictably.
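Scaling laws of this kind are usually written as a power of system size. As a worked sketch, assume a quantity Y (say, total road length) depends on a city's population N as shown below, where Y_0 and β are fitted constants. The value β = 0.85 is chosen purely for illustration.

```latex
\begin{aligned}
Y &= Y_0\,N^{\beta} \\
\frac{Y(2N)}{Y(N)} &= \frac{Y_0\,(2N)^{\beta}}{Y_0\,N^{\beta}} = 2^{\beta} \\
\beta = 0.85 \;&\Rightarrow\; 2^{0.85} \approx 1.80
\end{aligned}
```

With β below 1, doubling the population requires roughly 80% more of Y rather than 100% more; with β above 1, Y grows faster than the population. Because the ratio does not depend on N, the same pattern holds at every city size.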

Power Laws: 85 (Primarily discussed in complexity science)

Relationships where one quantity varies as a power of another.

Power laws describe how one quantity varies as a power of another, a relationship often observed in natural and social phenomena. They help explain distributions and patterns, such as the frequency of events or sizes of cities.

Example: Word frequency distribution in a large text corpus.
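A power law y = C * x^k becomes a straight line with slope k on a log-log plot, which is the usual first check for one. This Python sketch uses synthetic, Zipf-like word counts (not a real corpus) where the word at rank r occurs 60,000 / r times, and recovers the exponent with an ordinary least-squares fit on the logarithms.

```python
import math

def loglog_slope(xs: list[float], ys: list[float]) -> float:
    """Least-squares slope of log(y) against log(x)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    mean_x = sum(lx) / len(lx)
    mean_y = sum(ly) / len(ly)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(lx, ly))
    var = sum((a - mean_x) ** 2 for a in lx)
    return cov / var

# Synthetic data: count = 60,000 / rank, i.e. a power law with exponent -1.
ranks = list(range(1, 1001))
counts = [60_000 / r for r in ranks]

print(round(loglog_slope(ranks, counts), 6))     # -1.0
print(counts[0] / counts[1], counts[9] / counts[19])  # 2.0 2.0: doubling the rank halves the count at any scale
```

Real data is noisier, and a straight-looking log-log plot alone is weak evidence; careful studies use more robust fitting methods.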

Self-Organized Criticality: 90 (Primarily discussed in complexity science)

Systems naturally evolve toward critical states where small events can trigger large consequences.

Self-organized criticality describes how complex systems naturally evolve into a critical state where minor events can lead to large-scale consequences. This concept helps explain phenomena like avalanches or market crashes.

Example: A sandpile collapsing after reaching a critical slope.
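The sandpile example is usually formalized as the Bak-Tang-Wiesenfeld model: any cell holding four or more grains topples, sending one grain to each neighbor, and grains pushed over the edge are lost. The Python sketch below is a minimal version under two simplifying assumptions: grains are always dropped on the center cell (many versions drop them at random sites), and the grid size and number of grains are arbitrary.

```python
def relax(grid: list[list[int]]) -> int:
    """Topple every cell holding 4+ grains; return the avalanche size (number of topplings)."""
    size = len(grid)
    unstable = [(r, c) for r in range(size) for c in range(size) if grid[r][c] >= 4]
    topplings = 0
    while unstable:
        r, c = unstable.pop()
        if grid[r][c] < 4:
            continue  # already relaxed through an earlier entry
        grid[r][c] -= 4
        topplings += 1
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < size and 0 <= nc < size:  # grains falling off the edge are lost
                grid[nr][nc] += 1
                if grid[nr][nc] >= 4:
                    unstable.append((nr, nc))
        if grid[r][c] >= 4:
            unstable.append((r, c))
    return topplings

def drop_grains(size: int, grains: int) -> tuple[list[list[int]], list[int]]:
    """Drop grains one at a time on the center cell, relaxing the pile after each drop."""
    grid = [[0] * size for _ in range(size)]
    avalanches = []
    for _ in range(grains):
        grid[size // 2][size // 2] += 1
        avalanches.append(relax(grid))
    return grid, avalanches

grid, avalanches = drop_grains(size=11, grains=2000)
print(avalanches[:5])   # [0, 0, 0, 1, 0]: most drops change little...
print(max(avalanches))  # ...but occasionally one drop triggers a large cascade
```

Nobody tunes the pile to its critical state; the simple local rule drives it there, after which avalanches of very different sizes follow from identical single-grain events.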

Fractals: 90 (Mostly associated with complexity science)

Structures exhibiting self-similarity across different scales.

Fractals are complex structures that display similar patterns at different scales. They are found in natural phenomena, such as coastlines and tree branches, and help describe irregular and fragmented shapes in nature.

Example: The branching patterns of a snowflake.
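Self-similarity can be quantified with a similarity dimension. A worked example uses the Koch snowflake, a mathematical idealization rather than a real snowflake: each step replaces every line segment with N = 4 copies scaled by s = 1/3. Starting from a triangle with side length 1, the dimension D and the perimeter P_n after n steps are:

```latex
\begin{aligned}
D &= \frac{\log N}{\log(1/s)} = \frac{\log 4}{\log 3} \approx 1.26 \\
P_n &= 3\left(\tfrac{4}{3}\right)^{n} \to \infty \quad (n \to \infty)
\end{aligned}
```

A dimension between 1 and 2 captures the idea that the curve is more than a line but less than a surface: its perimeter grows without bound while the area it encloses stays finite.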

Agent-Based Modeling: 90 (Primarily associated with complexity science, though it can be relevant to systems thinking in certain contexts)

Simulating interactions of individual agents in a system.

Agent-based modeling is a computational approach to simulating the actions and interactions of autonomous agents. It helps study the emergent behavior of complex systems and assess the impact of individual actions on the whole system.

Example: Simulating crowd behavior in a pandemic.
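A minimal agent-based sketch in Python, assuming a simple susceptible/infected/recovered (SIR) scheme with random mixing. The function name and all parameter values are hypothetical. No agent knows about "the epidemic"; the rise and fall of infections emerges from individual contacts and recoveries.

```python
import random

def simulate_epidemic(population: int, contacts_per_step: int, infection_prob: float,
                      recovery_prob: float, steps: int, seed: int = 0) -> list[tuple[int, int, int]]:
    """Return (susceptible, infected, recovered) counts after each step."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    states = ["S"] * population
    states[0] = "I"  # a single initial case
    history = []
    for _ in range(steps):
        new_states = states[:]  # all agents act on the same snapshot of the world
        for agent, state in enumerate(states):
            if state != "I":
                continue
            # Each infected agent meets a few random others.
            for _ in range(contacts_per_step):
                other = rng.randrange(population)
                if states[other] == "S" and rng.random() < infection_prob:
                    new_states[other] = "I"
            # ...and may recover, after which it is immune.
            if rng.random() < recovery_prob:
                new_states[agent] = "R"
        states = new_states
        history.append((states.count("S"), states.count("I"), states.count("R")))
    return history

history = simulate_epidemic(population=500, contacts_per_step=3, infection_prob=0.1,
                            recovery_prob=0.2, steps=60, seed=42)
peak_step, (_, peak_infected, _) = max(enumerate(history), key=lambda item: item[1][1])
print(f"peak of {peak_infected} infected at step {peak_step}")
```

Changing a single individual-level rule, such as the number of contacts, is enough to change whether an outbreak takes off at all.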

Edge of Chaos: 90 (Primarily discussed in complexity science)

Zone between order and chaos where systems are most adaptable.

The edge of chaos refers to the transitional area between order and disorder where complex systems exhibit maximum adaptability and creativity. This concept highlights the balance needed for optimal system performance and evolution.

Example: A company exploring and innovating while maintaining structure and stability.

There you have it: the thirty-two key concepts as discussed in systems thinking and complexity theory. These topics will be part of our future work, and we will often refer back to this list. They first appear in our online course, “Taming Wicked Problems.”